People

Academic

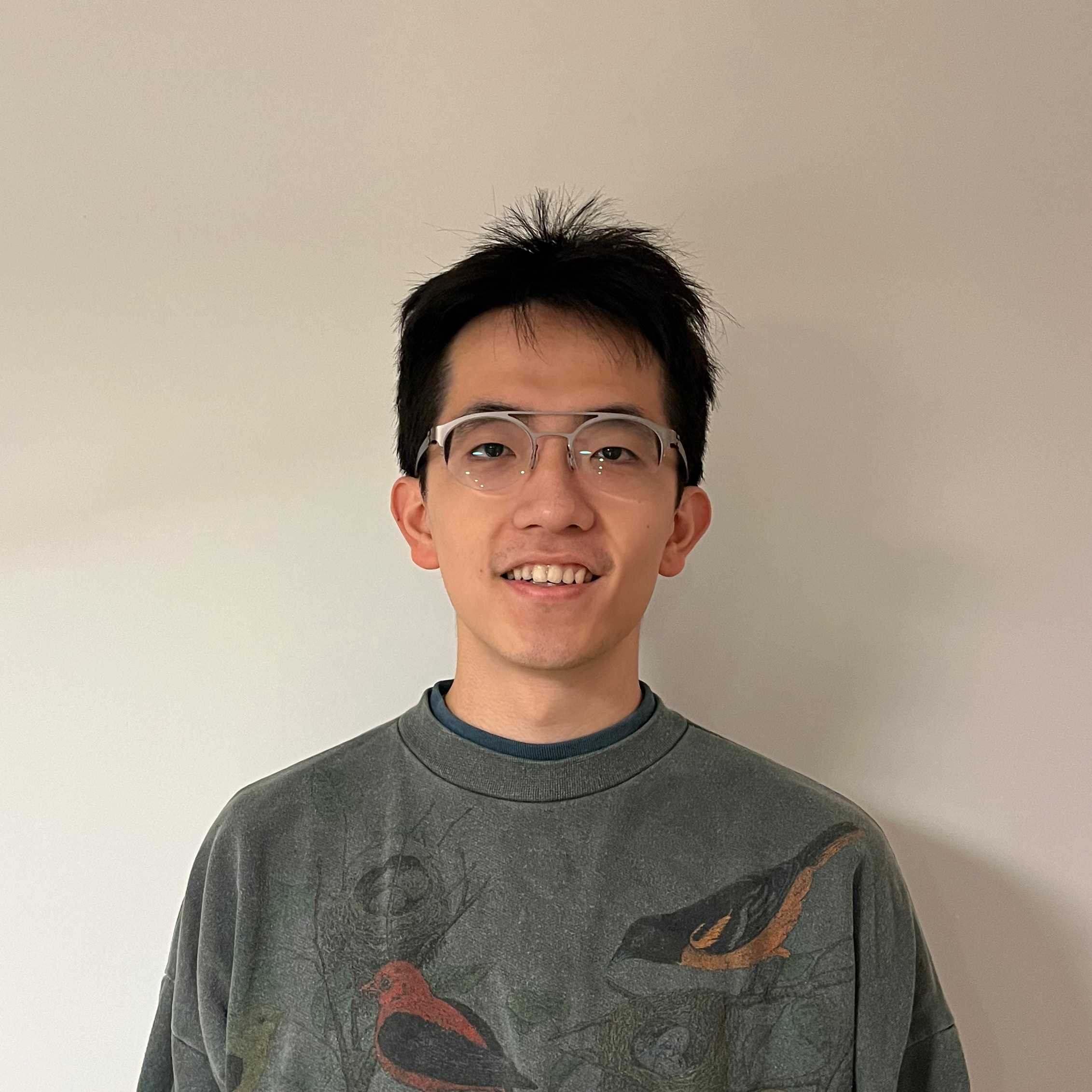

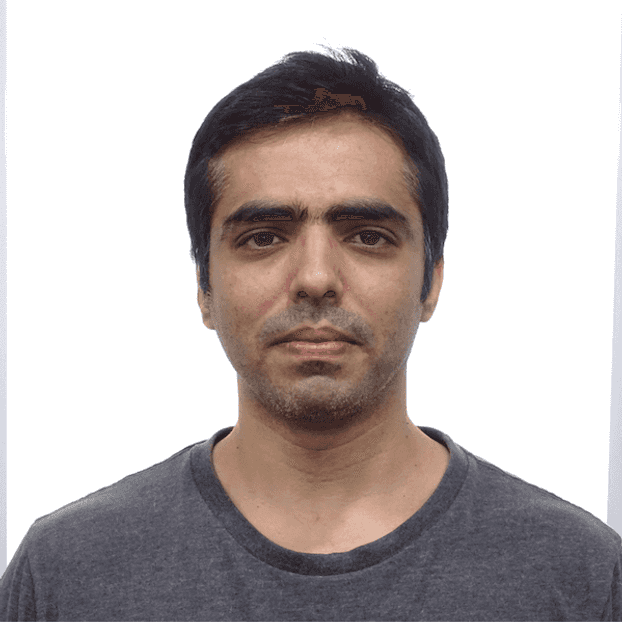

Dr Aidan Hogg

Lecturer in Computer Science

56291

spatial and immersive audio, music signal processing, machine learning for audio, music information retrieval

Dr Anna Xambó

Senior Lecturer in Sound and Music Computing

41762

new interfaces for musical expression, performance study, human-computer interaction, interaction design

Dr Charalampos Saitis

Lecturer in Digital Music Processing

46798

Communication acoustics, crossmodal correspondences, sound synthesis, cognitive audio, musical haptics

Dr Emmanouil Benetos

Reader in Machine Listening

27310

Machine listening / computer audition, Machine learning for audio and sequential data, Music information retrieval, Multimodal AI, Resource-efficient AI

Dr George Fazekas

Senior Lecturer

27055

Semantic Audio, Music Information Retrieval, Semantic Web for Music, Machine Learning and Data Science, Music Emotion Recognition, Interactive music systems (e.g. intelligent editing, audio production and performance systems)

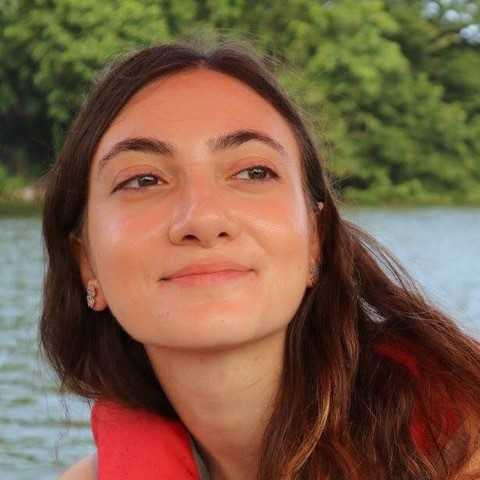

Dr Iran Roman

Lecturer in Sound and Music Computing

57815

theoretical neuroscience, machine perception, artificial intelligence

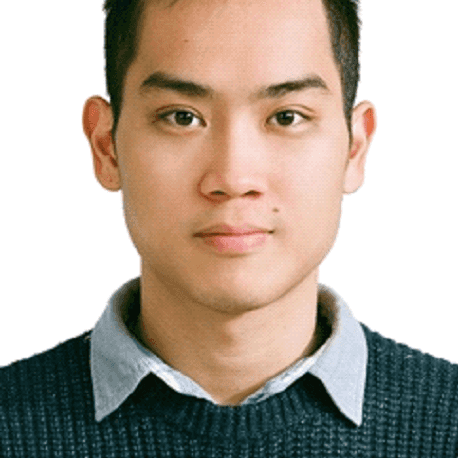

Dr Johan Pauwels

Lecturer in Audio Signal Processing

38282

automatic music labelling, music information retrieval, music signal processing, machine learning for audio, chord/key/structure (joint) estimation, instrument identification, multi-track/channel audio, music transcription, graphical models, big data science

Dr Lin Wang

Lecturer in Applied Data Science and Signal Processing

33238

signal processing; machine learning; robot perception

Dr Mathieu Barthet

Senior Lecturer in Digital Media

25194

Music information research, Internet of musical things, Extended reality, New interfaces for musical expression, Semantic audio, Music perception (timbre, emotions), Audience-Performer interaction, Participatory art

Dr Tony Stockman

Senior Lecturer

19464

Interaction Design, auditory displays, Data Sonification, Collaborative Systems, Cross-modal Interaction, Assistive Technology, Accessibility

Prof Andrew McPherson

Professor of Musical Interaction

29093

new interfaces for musical expression, augmented instruments, performance study, human-computer interaction, embedded hardware

Prof Joshua D Reiss

Professor of Audio Engineering

19433

sound engineering, intelligent audio production, sound synthesis, audio effects, automatic mixing

Prof Mark Sandler

C4DM Director

19989

Digital Signal Processing, Digital Audio, Music Informatics, Audio Features, Semantic Audio, Immersive Audio, Studio Science, Music Data Science, Music Linked Data.

Prof Simon Dixon

Professor of Computer Science, Deputy Director of C4DM, Director of the AIM CDT

21674

Music informatics, music signal processing, artificial intelligence, music cognition; extraction of musical content (e.g. rhythm, harmony, intonation) from audio signals: beat tracking, audio alignment, chord and note transcription, singing intonation; using signal processing approaches, probabilistic models, and deep learning.

Academic Associate

Dr Marcus Pearce

Reader in Cognitive Science

Music Cognition, Auditory Perception, Empirical Aesthetics, Statistical Learning, Probabilistic Modelling.

Prof Geraint Wiggins

Professor of Computational Creativity

Computational Creativity, Artificial Intelligence, Music Cognition

Prof Matthew Purver

Professor of Computational Linguistics

computational linguistics including models of language and music

Prof Pat Healey

Professor of Human Interaction

human interaction, human communication

PhD

Adam Andrew Garrow

PhD Student

Probabilistic learning of sequential structures in music cognition

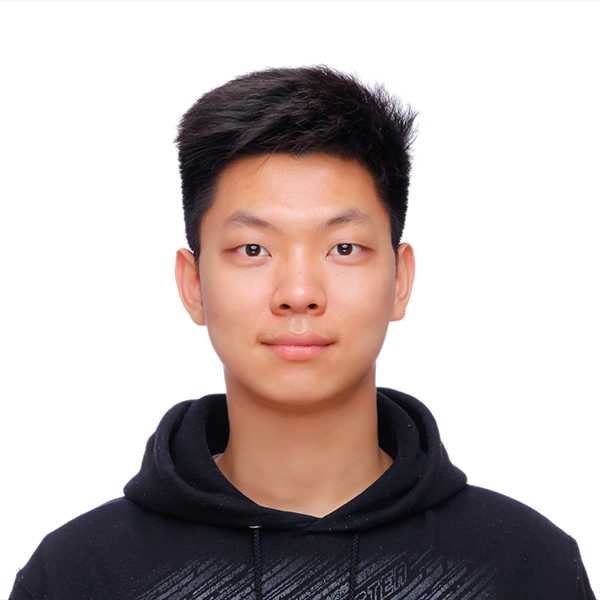

Adam He

PhD Student

Neuro-evolved Heuristics for Meta-composition

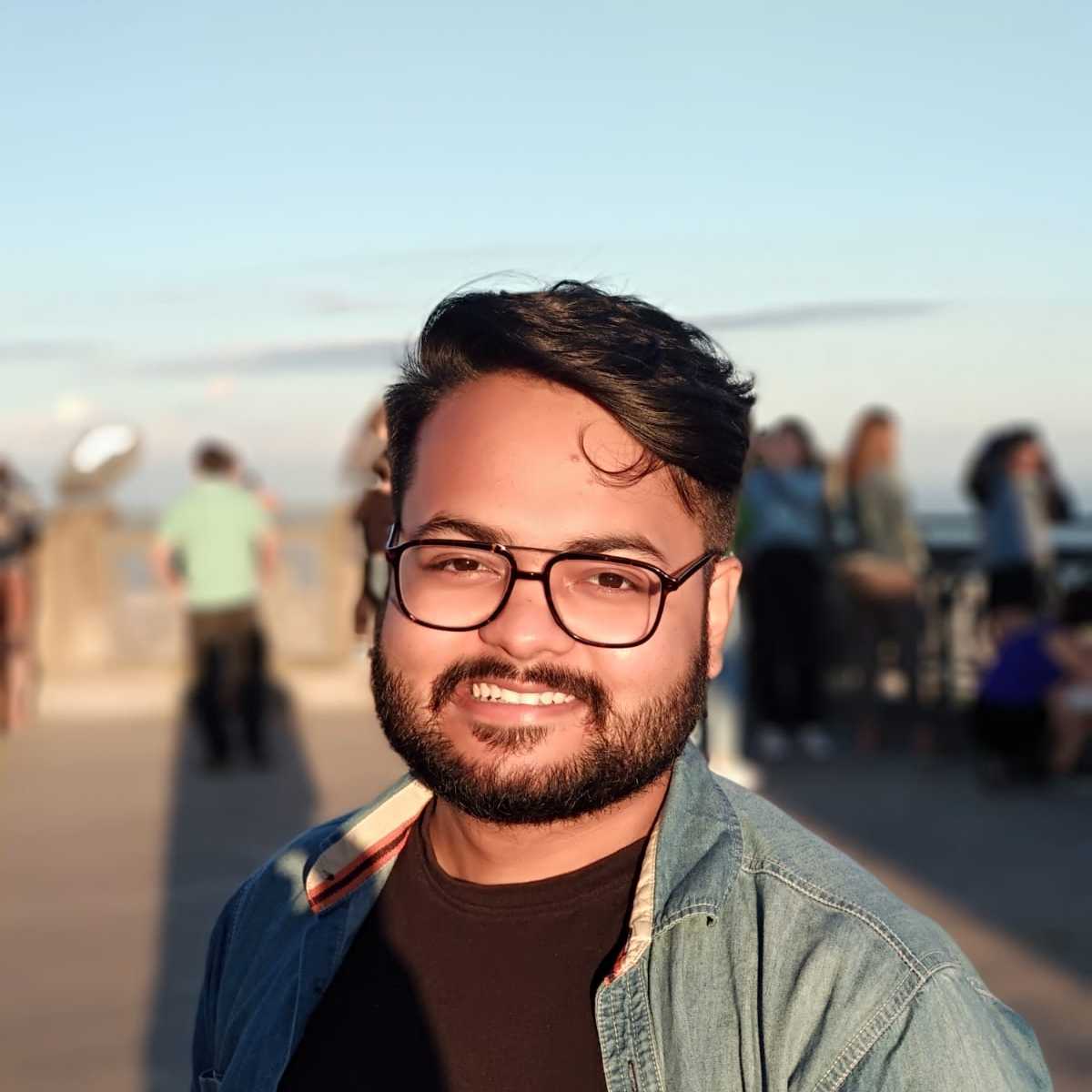

Aditya Bhattacharjee

PhD Student

Self-supervision in Audio Fingerprinting

Adán Benito

PhD Student

Beyond the fret: gesture analysis on fretted instruments and its applications to instrument augmentation

Alexander Williams

PhD Student

User-driven deep music generation in digital audio workstations

Andrea Martelloni

PhD Student

Real-Time Gesture Classification on an Augmented Acoustic Guitar using Deep Learning to Improve Extended-Range and Percussive Solo Playing

Andrew (Drew) Edwards

PhD Student

Deep Learning for Jazz Piano: Transcription + Generative Modeling

Antonella Torrisi

PhD Student

Computational analysis of chick vocalisations: from categorisation to live feedback

Ashley Noel-Hirst

PhD Student

Latent Spaces for Human-AI music generation

Ben Hayes

PhD Student

Differentiable Digital Signal Processing, Audio Synthesis, and Symmetry

Bleiz Del Sette

PhD Student

The Sound of Care: researching the use of Deep Learning and Sonification for the daily support of people with Chronic Primary Pain

Bradley Aldous

PhD Student

Enabling Efficient Training and Inference of Large Audio Models

Carey Bunks

PhD Student

Most people are familiar with Shazam, the app that recognizes recorded music using audio fingerprinting. It provides the title, artist, and other information from the audio of a song, as provided by the publisher. Shazam does not, however, work for live music. The topic of this thesis is song title recognition from live jazz.

Carlos De La Vega Martin

PhD Student

Neural Drum Synthesis

Chin-Yun Yu

PhD Student

Neural Audio Synthesis with Expressiveness Control

Chris Winnard

PhD Student

Music Interestingness in the Brain

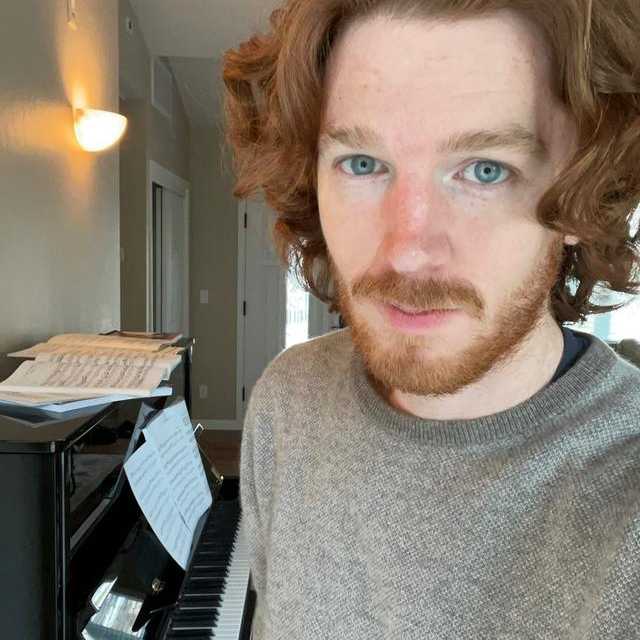

Christian Steinmetz

PhD Student

End-to-end generative modeling of multitrack mixing with non-parallel data and adversarial networks

Christopher Mitcheltree

PhD Student

Deep Learning for Time-varying Audio Systems and Spectrotemporal Modulations

Christos Plachouras

PhD Student

Deep learning for low-resource music

Cyrus Vahidi

PhD Student

Perceptual end to end learning for music understanding

David Foster

PhD Student

Modelling the Creative Process of Jazz Improvisation

David Marttila (Südholt)

PhD Student

Machine Learning of Physical Models for Voice Synthesis

Eleanor Row

PhD Student

Automatic micro-composition for professional/novice composers using generative models as creativity support tools

Elona Shatri

PhD Student

Optical music recognition using deep learning

Farida Yusuf

PhD Student

Neural computing for auditory object analysis

Franco Caspe

PhD Student

AI Timbre Transfer for Low-Latency Musical Interaction

Gabryel Mason-Williams

PhD Student

Artificial Neuroscience: Applying Mathematics to the Understanding and Control of Deep Learning

Gregor Meehan

PhD Student

Representation learning for musical audio using graph neural network-based recommender engines

Haokun Tian

PhD Student

Timbre Tools for the Digital Instrument Maker

Harnick Khera

PhD Student

Informed source separation for multi-mic production

Huan Zhang

PhD Student

Computational Modelling of Expressive Piano Performance

Iacopo Ghinassi

PhD Student

Semantic understanding of TV programme content and structure to enable automatic enhancement and adjustment

Ilaria Manco

PhD Student

Multimodal Deep Learning for Music Information Retrieval

Jackson Loth

PhD Student

Time to vibe together: cloud-based guitar and intelligent agent

James Bolt

PhD Student

Intelligent audio and music editing with deep learning

Jiawen Huang

PhD Student

Lyrics Alignment For Polyphonic Music

Jingjing Tang

PhD Student

Deep Generative Modelling for Expressive Piano Performance: From Symbolic Interpretation to Audio Realisation

Jinhua Liang

PhD Student

Everyday Sound Recognition with Limited Annotations

Jinwen Zhou

PhD Student

Combining Deep Learning and Music Theory

Jordie Shier

PhD Student

Real-time timbral mapping for synthesized percussive performance

Julien Guinot

PhD Student

Beyond Supervised Learning for Musical Audio

Katarzyna Adamska

PhD Student

Predicting hit songs: multimodal and data-driven approach

Keshav Bhandari

PhD Student

Neuro-Symbolic Automated Music Composition

Lele Liu

PhD Student

Automatic audio-to-score piano transcription with deep neural networks

Lewis Wolstanholme

PhD Student

Meta-Physical Modelling

Louis Bradshaw

PhD Student

Neuro-symbolic music models

Luca Marinelli

PhD Student

Gender-Coded Sound: A multimodal analysis of gender encoding strategies in music for advertising

Madeline Hamilton

PhD Student

Perception and analysis of 20th and 21st century popular music

Marco Comunità

PhD Student

Machine learning applied to sound synthesis models

Marco Pasini

PhD Student

Fast and Controllable Music Generation

Mary Pilataki

PhD Student

Deep learning methods for multi-instrument music transcription

Max Graf

PhD Student

When XR Meets AI: Machine Learning for Gesture Control Mechanisms in XR Musical Instruments

Minhui Lu

PhD Student

Applications of deep learning for improved synthesis of engine sounds

Nelly Garcia

PhD Student

An investigation evaluating realism in sound design

Ningzhi Wang

PhD Student

Generative Models For Music Audio Representation And Understanding

Oluremi Falowo

PhD Student

E-AIM - Embodied Cognition in Intelligent Musical Systems

Pablo Tablas De Paula

PhD Student

Machine Learning of Physical Models

Qiaoxi Zhang

PhD Student

Multimodal AI for musical collaboration in immersive environments

Qing Wang

PhD Student

Multi-modal Learning for Music Understanding

Rodrigo Mauricio Diaz Fernandez

PhD Student

Hybrid Neural Methods for Sound Synthesis

Ruby Crocker

PhD Student

Continuous mood recognition in film music

Sara Cardinale

PhD Student

Character-based adaptive generative music for film and video games using Deep Learning and Hidden Markov Models

Shahar Elisha

PhD Student

Style classification of podcasts using audio

Shangxuan Luo

PhD Student

Adaptive Music Generation for Video Game

Shubhr Singh

PhD Student

Audio Applications of Novel Mathematical Methods in Deep Learning

Shuoyang Zheng

PhD Student

Explainability of AI Music Generation

Soumya Sai Vanka

PhD Student

User-Centric Intelligent Context-Aware Multitrack Music Mixing

Teodoro Dannemann

PhD Student

Sabotaging, errors and other mistakes as a source of new techniques in music improvisation

Teresa Pelinski

PhD Student

Sensor mesh as performance interface

Tyler Howard McIntosh

PhD Student

Expressive Performance Rendering for Music Generation Systems

Vjosa Preniqi

PhD Student

Predicting demographics, personalities, and global values from digital media behaviours

Xavi D'Cruz

PhD Student

Machine Learning of Physical Models

Xavier Riley

PhD Student

Pitch tracking for music applications - beyond 99% accuracy

Xiaojing Liu

PhD Student

Automatic Mixing for teleconferencing, gaming and live streaming

Xiaowan Yi

PhD Student

Composition-aware music recommendation system for music production

Yannis (John) Vasilakis

PhD Student

Towards multilabel classification with joint audio-text models

Yazhou Li

PhD Student

Virtual Placement of Objects in Acoustic Scenes

Yifan Xie

PhD Student

Film score composer AI assistant: generating expressive mockups

Yin-Jyun Luo

PhD Student

Industry-scale Machine Listening for Music and Audio Data

Yinghao Ma

PhD Candidate

Large Language Models for Music

Yisu Zong

PhD Student

Machine learning for physical models of sound synthesis

Yixiao Zhang

PhD Student

Improving Controllability and Editability for Pretrained Text-to-Music Generation Models

Yorgos Velissaridis

PhD Student

Personalizing Audio with Context-aware AI using Listener Preferences and Psychological Factors

Zixun Nicolas Guo

PhD Student

Multimodal Foundational Models for Music

Postdoc

Dr Ken O'Hanlon

Postdoctoral Researcher

Project: Fine-grained music source separation with deep learning models

Dr Luigi Marino

Research Fellow in Sound and Music Computing

Networks able to display relationships between human and nonhuman actors. Project: Sensing the Forest.

Research Assistant

Ivan Meresman Higgs

Research Assistant

Sample Identification in Mastered Songs using Deep Learning Methods

Jaza Syed

Research Assistant

Audio ML, Automatic Lyrics Transcription

Marikaiti Primenta

Research Assistant

Project Maestro - AI Musical Analysis Platform

Sebastian Löbbers

Research Assistant

UKRI Centre for Doctoral Training in AI and Music

Sungkyun Chang

Research Assistant

Music Performance Assessment and Feedback

Support

Alvaro Bort

Research Programme Manager

Projects: UKRI Centre for Doctoral Training in Artificial Intelligence and Music, New Frontiers in Music Information Processing (MIP-Frontiers)
