The British Machine Vision Association and Society for Pattern Recognition (BMVA) Workshop on Multimodal Large Models Bridging Vision, Language, and Beyond was held at the British Computer Society (BCS), 25 Copthall Avenue, London EC2R 7BP, on November 5th, 2025.

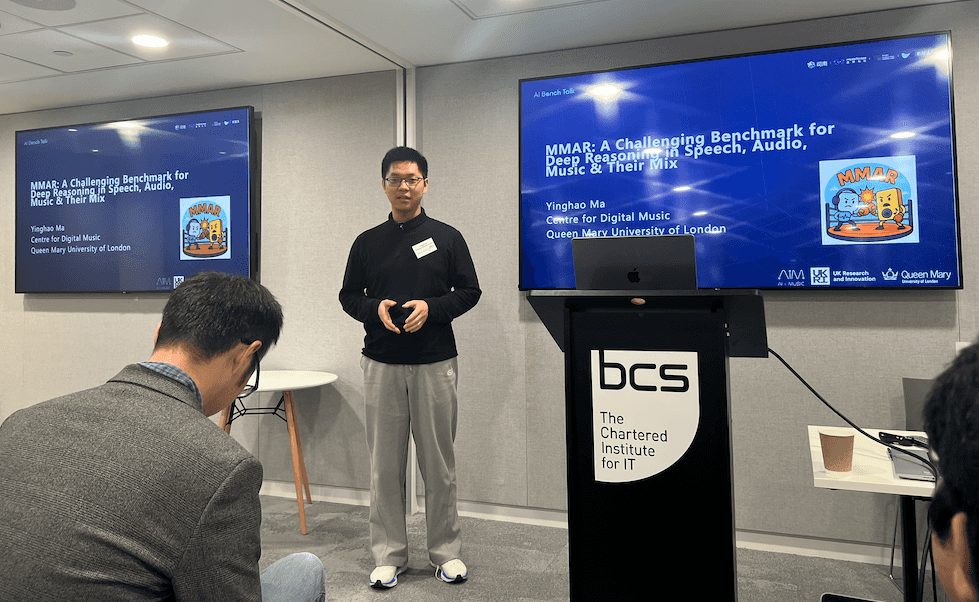

Among the selected oral presentations, C4DM PhD student Yinghao Ma (supervised by Prof. Emmanouil Benetos) presented his latest work, "MMAR: A Challenging Benchmark for Deep Reasoning in Speech, Audio, Music, and Their Mix". MMAR is a newly released benchmark of 1,000 real-world audio reasoning tasks covering speech, sound events, music, and mixed-modality scenarios. It is one of the first benchmarks to explicitly evaluate the multi-step reasoning abilities of audio-language and omni-modal large models, with tasks ranging from low-level signal perception to high-level cultural understanding.

The talk was part of the “Domain Applications and Human-Centric Modalities” session, alongside research on sign language translation, visual illusions, and 3D-aware facial editing. MMAR attracted interest from attendees in both academia and industry, especially as multimodal reasoning becomes a key focus of the next wave of AI foundation models.

The BMVA workshop featured keynote speakers from Google DeepMind, UCL, and the University of Surrey, and brought together researchers advancing the frontier of multimodal intelligence across vision, language, audio, and embodied learning.

MMAR is open source, and the paper is available on arXiv: arXiv:2505.13032. A video recording of the presentation is available here.